Hello! My name’s Ryan and I’m an “undecided” voter.

No, it’s not what you think.

I’m not undecided between these guys:

There’s no way in hell I’m voting for Romney.

Nor am I an idiot, whatever Bill Maher not-so-subtly suggested last week. (It’s okay, Bill, I can take a joke.)

I’m undecided between these guys (and gal):

Mathematician and author John Allen Paulos described the situation a little more elegantly:

As presidential race progresses, the many characterizations of the undecided dwindle to two: unusually thoughtful or unusually ignorant.

— John Allen Paulos (@JohnAllenPaulos) September 9, 2012

I’d like to believe that I fall into the “unusually thoughtful” category, and I want to share my perspective.

FULL DISCLOSURE: This is my personal blog and obviously biased by my opinions. I’m a member of the Green Party and have made a “small value” donation to the Stein campaign. Despite my party membership, I try to vote based on the issues and not the party. I voted for Obama in 2008 and voted for Ron Paul in the 2012 GOP primary. While I’m not technically an “independent” due to my affiliation with the Greens, I’m probably about as close to one as it gets.

Let’s start with a little historical background and work our way forward from there.

The Nader Effect

My first voting experience was in the 2000 election. I didn’t like either Gore or Bush, and ended up gravitating towards the Nader campaign. His positions on the issues most closely aligned with my own, so I did what seemed like the most rational thing to do at the time. I voted for him.

After the election, Nader (and the Green Party in general) received a great deal of criticism from Democrats for “spoiling” the election. The Democrats argued that votes cast for Nader in key states like Florida would otherwise have been cast for Gore. The counterargument is that the election was ultimately decided by the Supreme Court in Bush v. Gore, but I won’t get into that.

From my perspective, my vote for Nader in this election could not be counted as a “spoiler”. I was living in California at the time, and the odds of California’s electoral votes going to Bush in 2000 were negligible. My vote for Nader was completely “safe” and allowed me to voice my opinion on the issues I cared about. Still, this notion of a “spoiler vote” forever changed how I thought about my voting strategy.

Independence of Irrelevant Alternatives

In the 1950s, economist Kenneth Arrow conducted a mathematical analysis of voting systems. The result, now known as Arrow’s Impossibility Theorem, proved that when there are three or more candidates, no ranked voting system can satisfy all of the following conditions for a “fair” election:

- It accounts for the preferences of multiple voters, rather than a single individual

- It accounts for all preferences among all voters

- Adding another choice should not change the outcome between the existing choices

- An individual should never hurt the chances of an outcome by rating it higher

- Every possible societal preference should be achievable by some combination of individual votes

- If every individual prefers a certain option, the overall result should reflect this

Arrow was largely concerned with ranked voting systems, such as instant-runoff voting, and proved that no such system could ever satisfy all of these conditions at once. Some non-ranked systems, such as score voting, meet most of them. The condition that interests me here, and the one our present plurality system doesn’t meet, is the third. It goes by the technical name of independence of irrelevant alternatives: the outcome of a vote should not be affected by the inclusion of additional candidates. In other words, there should never be a “spoiler effect”.
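To make the spoiler effect concrete, here is a minimal Python sketch of plurality (first-past-the-post) counting. The candidates A, B, and C and all the vote counts are made up for illustration. Every voter ranks A and B the same way in both scenarios, yet adding C flips the winner, which is exactly the violation of independence of irrelevant alternatives.

```python
from collections import Counter


def plurality_winner(ballots: list[str]) -> str:
    """Return the candidate with the most first-choice votes (first past the post)."""
    counts = Counter(ballots)
    # most_common(1) returns [(candidate, votes)] for the top vote-getter.
    return counts.most_common(1)[0][0]


# Hypothetical electorate of 100 voters; the numbers are illustrative only.
two_way = ["A"] * 52 + ["B"] * 48

# Same electorate, but 8 of A's supporters now vote for a third candidate C.
# They still prefer A over B; only the set of options on the ballot changed.
three_way = ["A"] * 44 + ["C"] * 8 + ["B"] * 48

print(plurality_winner(two_way))
print(plurality_winner(three_way))
```

In the second scenario, 52 of the 100 voters still prefer A to B, but B wins with 48 votes because A’s support is split.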

What I find interesting here is that the very mechanics of our voting system produce a situation where elections are controlled by a two-party system. It forces citizens to vote tactically for the “lesser of two evils”, while from my perspective both of those “evils” have gotten progressively worse. George Washington warned of this outcome in his farewell address:

However [political parties] may now and then answer popular ends, they are likely in the course of time and things, to become potent engines, by which cunning, ambitious, and unprincipled men will be enabled to subvert the power of the people and to usurp for themselves the reins of government, destroying afterwards the very engines which have lifted them to unjust dominion.

Until we can address the issues inherent in our voting system itself, I’m left with no choice but to vote strategically in this election. My voting policy is a form of minimaxing: minimizing the worst-case harm while still maximizing the potential gain. It’s with this strategy in mind that I turn to the options in the 2012 presidential race.

Quantifying Politics

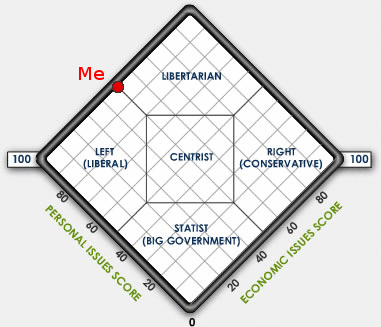

In order to apply a mathematical analysis to voting, it is first necessary to have some way of quantifying political preferences. To do so, I’ll turn to the so-called Nolan Chart. An easy way to find out where you stand on the Nolan Chart is the World’s Smallest Political Quiz. Here’s where it places me:

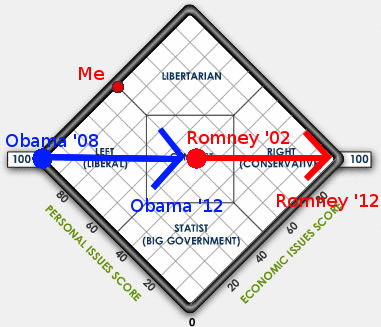

Here’s where I’d place the 2012 candidates:

Note that this is my subjective opinion and may not reflect the opinions of the candidates themselves. It’s also important to note that this is a simplified model of political disposition. There are other models, such as the Vosem (восемь) Chart, that include more than two axes. If you were, for example, to include “ecology” as a third axis, it would place me closer to Stein than to Obama, and closer to Obama than to Johnson. The resulting distances vary depending on which axes you choose, so I’m just going to stick with the more familiar Nolan Chart.

Since I’m politically equidistant from the three candidates I’m considering, my minimax voting strategy would suggest that I vote for the one with the highest chance of winning: Obama. However, there are many more variables to consider that might lead to a different conclusion. One of those variables is something I call “political flux”.
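Here is a rough Python sketch of that reasoning: measure straight-line distance on the Nolan Chart, then break ties by chance of winning. The coordinates and win probabilities are hypothetical placeholders, chosen so that three candidates sit equally far from me. They are not my actual quiz scores or anyone’s published forecast, and this tie-break rule is a simplification of the minimax idea rather than a formal game-theoretic solution.

```python
import math

# Hypothetical Nolan Chart scores as (personal freedom, economic freedom), each 0-100.
# Placeholders picked to make three candidates equidistant; not real quiz results.
ME = (65, 45)
CANDIDATES = {
    "Obama": (45, 30),
    "Stein": (80, 25),
    "Johnson": (80, 65),
    "Romney": (35, 70),
}
# Hypothetical chances of winning; only their ordering matters for the tie-break.
WIN_CHANCE = {"Obama": 0.79, "Romney": 0.20, "Stein": 0.005, "Johnson": 0.005}


def pick_candidate(me, candidates, win_chance, tol=1e-9):
    """Return (choice, distances): the nearest candidate, ties broken by win chance."""
    distances = {name: math.dist(me, pos) for name, pos in candidates.items()}
    nearest = min(distances.values())
    # Anyone within `tol` of the minimum distance counts as tied.
    tied = [name for name, d in distances.items() if d - nearest <= tol]
    choice = max(tied, key=lambda name: win_chance[name])
    return choice, distances


choice, distances = pick_candidate(ME, CANDIDATES, WIN_CHANCE)
for name, d in sorted(distances.items(), key=lambda item: item[1]):
    print(f"{name:8s} {d:6.2f}")
print("vote:", choice)
```

With these placeholder numbers, Obama, Stein, and Johnson are each 25 units away, Romney is about 39 away, and the tie goes to the candidate most likely to win.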

Political Flux

People change. It’s a well-known fact of life, and political opinions are no exception. If you look at the stances Obama and Romney have taken during this campaign and compare them to their previous positions, I think you’ll see a trend that looks something like this:

Obama campaigned hard left in 2008, but during his term in office his policies have shifted toward the center. Romney campaigned in the center while running for governor of Massachusetts, but has shifted toward the right during his presidential campaign. These changes concern me greatly, because both candidates are moving away from my position. Thus, while Obama is closer to me on the political spectrum, the fact that he is moving away from my position makes the long-term payoffs lower than they would be if he had “stuck to his guns”. In turn, this makes the 3rd party candidates a more appealing option.

I might even go so far as to suggest that this “political flux” is the reason these 3rd party candidates are running. Statistically, their odds of winning are too low to change the outcome of the election. However, they can influence the direction of the political discourse. The more people vote for them, the more likely it is that future candidates will venture in those respective directions. This vote comes at a “risk”, though: a 3rd party vote can “spoil” the election for the lesser of the two evils. The level of this risk varies from state to state because of the Electoral College system.

The Electoral College

Winning the popular vote is not enough to win the election. The president is selected by the Electoral College, in which each state gets a number of electoral votes that is (mostly) proportional to its population: one per House seat plus one per Senator. In some states, the polls predict a pretty solid winner and loser for the presidential race. Others merely tend to lean right or left. According to The New York Times, the following states are considered a “toss-up” in the upcoming election:

- Colorado

- Florida

- Iowa

- North Carolina

- New Hampshire

- Nevada

- Ohio

- Virginia

- Wisconsin

If you live in one of these states, the risks of voting for a third party are greater because your vote has a higher chance of “spoiling” the election for one of the candidates. I happen to live in Virginia, one of the 2012 “battleground” states. I foresee a large number of attack ads in my near future. The big question is: is the payoff worth the risk?

Aikido Interlude

For the past couple of months, I’ve been studying Aikido, a martial art that might be best described as “the way of combining forces”. The idea is to blend one’s movements with those of the attacker, redirecting the motion of the combined system so that neither individual is harmed by the result. As a lowly gokyu, I still have a lot to learn about this art, but I find some of its core principles rather insightful from a physical and mathematical perspective.

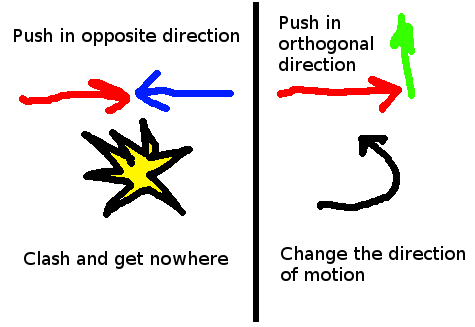

The basic idea is a matter of physics. If an object has a significant amount of momentum, it takes an equal and opposite impulse to stop it. However, if you apply a force that is orthogonal (perpendicular) to the direction of motion, it’s relatively easy to change the direction of motion. You don’t block the attack in aikido. You redirect the attack in a way that’s advantageous to your situation. You can see the basic idea in my crude drawing below:

As a result, many aikido techniques have a “circular” sort of appearance. In reality, it’s the combination of the attacker’s momentum and the orthogonal force applied by the defender that causes this. See if you can spot this in the following video of Yamada sensei:
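For a back-of-the-envelope version of the physics, here is a Python sketch that treats the attack as a point mass with momentum p and the defender’s push as a single instantaneous impulse, which is a big simplification of real aikido. Stopping the attack takes an impulse of size |p|, while one push of size J at right angles to the motion turns the heading by atan(J/|p|). The function names are my own, chosen for illustration.

```python
import math


def perpendicular_impulse_for_turn(p_mag: float, degrees: float) -> float:
    """Size of one right-angle push that turns momentum of size p_mag by `degrees`.

    After the push the momentum components are (p_mag, j), so the heading changes
    by atan(j / p_mag); solving for j gives p_mag * tan(angle).
    """
    return p_mag * math.tan(math.radians(degrees))


def heading_change(p: tuple[float, float], j: tuple[float, float]) -> float:
    """Angle in degrees between momentum p and the new momentum p + j."""
    q = (p[0] + j[0], p[1] + j[1])
    cross = p[0] * q[1] - p[1] * q[0]
    dot = p[0] * q[0] + p[1] * q[1]
    return math.degrees(math.atan2(cross, dot))


p = (10.0, 0.0)                # attacker's momentum along +x, arbitrary units
stop_cost = math.hypot(*p)     # stopping needs an impulse of -p, i.e. size |p|
turn_cost = perpendicular_impulse_for_turn(stop_cost, 30.0)
print(f"stop: {stop_cost:.2f}  turn 30 degrees: {turn_cost:.2f}")
print(f"check: {heading_change(p, (0.0, turn_cost)):.1f} degrees")
```

With |p| = 10, a 30° deflection needs an impulse of about 5.77, a bit more than half the cost of stopping the attack outright. By the same formula, a 45° turn costs the full |p|, so the advantage applies to deflecting an attack rather than reversing it.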

So what does this have to do with voting?

Well, consider my position on the Nolan Chart and the direction in which the two major candidates are moving. As much as I would like to shift the debate to the left, it would take a significant amount of political force and time to negate this momentum toward the right, and even longer to push it in the opposite direction. It would be much more efficient to push “north” and let the momentum carry the political culture toward my general position.

In other words, voting for Gary Johnson might actually be the path of least resistance to my desired policies.

Metagaming the Election

Here you can start to see my predicament. Part of me wants to vote for Gary Johnson, because I think doing so would be most likely to shift the debate in the direction I want it to go. Part of me wants to vote for Jill Stein, as doing so would help strengthen the political party I belong to. Part of me wants to vote for Barack Obama, but only because doing so would have the greatest chance of preventing a Romney presidency. According to the latest polling data, the odds of Obama being re-elected are 4:1 (an 80% chance). Those are pretty good odds, but this is a high-stakes game. It sure would be nice if there were a way to “have my cake and eat it too”.

It turns out that there is.

I can metagame the election.

The idea of metagaming is that you can apply knowledge from “outside the game” to alter your strategy in a way that increases the chance of success. In this case, I’ve decided to employ a strategy of vote pairing.

You see, I live in the same state as my in-laws who traditionally vote Republican. However, despite a history of voting GOP, they’re both very rational people. Romney keeps shooting himself in the foot by saying things that are downright stupid. Screen windows are airplanes? Free health care at the emergency room? The more Romney talks, the easier it becomes to convince rational people that he’s unfit to be president.

After many nights of debate, we’ve come to the realization that we’re only voting for one of the two major parties because the other party is “worse”. From there, a solution presents itself: “I’ll agree not to vote for Barack Obama if you agree not to vote for Mitt Romney.” This agreement benefits everyone involved. Without it, our votes just cancel each other out. With it, the net effect on the race between Obama and Romney is still zero, but our votes are now free to be spent elsewhere. The end result is that each of our votes carries more weight without altering the head-to-head margin between the two major candidates.
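The bookkeeping behind the pact can be checked with a few lines of Python. The statewide totals below are made-up numbers, and the sketch assumes one voter from each side who would otherwise vote for Obama and Romney respectively; which third party each of them picks is up to them.

```python
from collections import Counter

# Hypothetical totals from everyone else in the state (made-up numbers).
others = Counter({"Obama": 1000, "Romney": 990, "Stein": 5, "Johnson": 7})


def obama_margin(tally: Counter) -> int:
    """Obama's lead over Romney: the quantity the pact must leave unchanged."""
    return tally["Obama"] - tally["Romney"]


def third_party_total(tally: Counter) -> int:
    return tally["Stein"] + tally["Johnson"]


# Without the pact, the two voters cancel each other out.
no_pact = others + Counter({"Obama": 1, "Romney": 1})
# With the pact, each casts a third-party vote instead.
pact = others + Counter({"Stein": 1, "Johnson": 1})

print(obama_margin(no_pact), obama_margin(pact))
print(third_party_total(no_pact), third_party_total(pact))
```

Obama’s lead over Romney is 10 votes either way; the only thing that changes is that the third-party total goes from 12 to 14.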

With the vote pairing secured, I’m free to vote for Stein or Johnson at my own discretion. Both candidates agree on what I think is the most important issue: ending our “wars” (of which there are too many to list). They differ on a number of issues, particularly economics and the environment. Personally, I think the Greens and Libertarians need to meet halfway on the issues with an Eco-libertarian ticket. Jill Stein needs to recognize that the US Tax Code is a mess and needs reform; doing so can help eliminate corporate handouts, many of which go to industries that adversely affect public health. Gary Johnson needs to recognize that laissez-faire economic policies alone will not fix our broken health care system or halt impending climate change. I’m looking forward to seeing debates between Stein and Johnson, which I think will highlight the complexities of these issues and hopefully identify some possible solutions.

That’s great, but what can I do?

You can enter a vote pairing agreement with someone from the opposite party. If you would ordinarily vote for the Democrats, look up which of your Facebook friends “like” Mitt Romney. If you would ordinarily vote for the Republicans, look up which of your Facebook friends “like” Barack Obama. Talk about the issues that are important to you in the race, discuss your objections to the other candidate, and if things go well, agree to both vote for a third party. If everyone did this, one of those 3rd parties might actually win. Even if it doesn’t change the outcome, you’ll know that your vote didn’t “spoil” the election for your second choice.

If you want to go one step further, you can Occupy the CPD. Sign the petition to tell the Commission on Presidential Debates that you think we should hear from all qualified candidates and not just the two that they think we should hear from.

Finally, research the alternative parties and join one that matches your personal beliefs. Even if you end up voting for one of the two major parties, joining a 3rd party and supporting that movement can have a significant effect on future campaigns. Here are a few links to get you started: